When building a new gaming PC, one of the most important choices is your graphics card. The GPU is responsible for rendering all those stunning visuals, textures, and details that immerse you in your favorite game worlds.

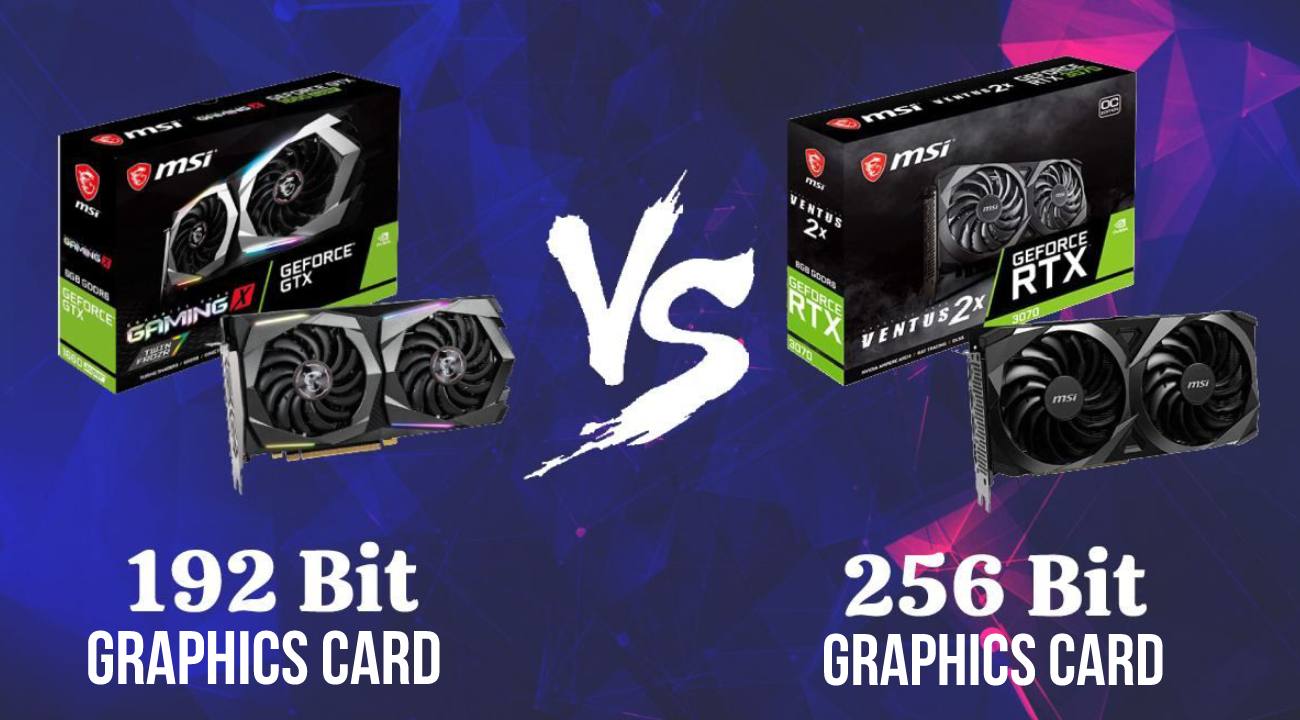

But with confusing specifications like “192-bit” and “256-bit” to parse, how do you know which graphics card to choose?

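The bit figure is the width of the memory bus: the number of bits that can move between the GPU processor and its VRAM (video memory) in a single transfer. This article refers to it as the card’s bitrate.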

A wider 256-bit interface allows higher potential memory bandwidth than a 192-bit one at the same memory speed. In graphics-intensive games that are limited by memory bandwidth, this can translate into an fps boost, sometimes quoted at around 10-15%. However, a powerful 192-bit video card can still deliver fluid 1440p or entry-level 4K gaming with optimized settings.

For example, Nvidia’s RTX 3060 offers a 192-bit memory bus and the RTX 3070 has a 256-bit bus. Both handle 1440p gaming well, at over 60 fps on high settings. The 3070 is the faster card, helped by its wider interface and bandwidth, although it also has more GPU cores, so the bus is not the only reason.

But I still recommend the 192-bit RTX 3060 to most people, because it’s almost $170 cheaper and can still drive modern titles smoothly.

What is GPU Bit Rate?

A graphics card’s bitrate, more precisely its memory bus width, indicates how much data can travel between the VRAM and the GPU within each clock cycle.

But does GPU bitrate matter in real-life scenarios?

The answer is yes. With a higher bitrate, a GPU can transfer more data per cycle. Consequently, you can expect better performance and more headroom for high-fidelity settings, especially at high resolutions where memory traffic is heaviest.

192-bit and 256-bit graphics cards are among the most common options on the market. The main difference between them lies in the memory bus.

A 192-bit GPU can transfer 192 bits of data per clock cycle, and a 256-bit GPU can transfer 256 bits of data per clock cycle. So, if the RAM frequency is held constant, a 256-bit graphics card offers more bandwidth than a 192-bit GPU.

The GPU isn’t the only component affecting gaming PC builds. Your processor and its clock speeds also matter, especially when trying to maximize high refresh rate monitor performance.

In this article, we will dig into the differences between 192-bit vs 256-bit graphics cards so that you can make an informed decision about which one to go for. But before that, let’s see how you can check your graphics card’s bitrate.

How to Check Your Graphics Card Bit Rate?

If you are buying a new graphics card, you will find the bitrate in the specifications list, usually labelled as the memory bus or memory interface width. The spec list is available on the manufacturer’s website, in the product description (on e-commerce sites like Amazon), and on the package the GPU comes in.

However, if you want to check the bitrate of your installed graphics card quickly, follow the steps below.

- Download and install GPU-Z

- Run the GPU-Z.exe program

- Check the “Bus Width” field. It shows your graphics card’s bitrate.

192 Bit vs 256 Bit Graphics Card: Major Differences

Now that you have an idea of what GPU bitrate is, why it matters, and how to check it, it’s time to dig deeper into the differences between 192-bit vs. 256-bit graphics cards.

Let’s get started.

Bandwidth

A graphics card’s memory bandwidth is the data transfer speed between the GPU and its VRAM over the memory bus. Gamers, graphics designers, video editors, app developers, and machine learning experts find higher bandwidth extremely useful.

The bandwidth of a graphics card is measured in GB/s (gigabytes per second). It is determined by the card’s bitrate and the effective frequency of its RAM. You divide the bitrate by 8 to convert bits to bytes and then multiply the result by the RAM frequency.

A 192-bit graphics card with 3,000 MHz RAM offers a bandwidth of 72,000 MB/s or 72 GB/s. However, a 256-bit graphics card with the same RAM frequency provides a bandwidth of 96,000 MB/s or 96 GB/s.
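
As a rough sketch of the calculation above, the Python snippet below reproduces the two worked figures. The function name `memory_bandwidth_mb_s` is made up for this illustration, and it assumes the quoted RAM frequency is the effective transfer rate in MHz. Because the bus width is divided by 8, the result is in bytes rather than bits, so the output is labelled MB/s and GB/s.

```python
def memory_bandwidth_mb_s(bus_width_bits: int, effective_mhz: float) -> float:
    """Peak memory bandwidth in MB/s.

    Assumes effective_mhz is the effective transfer rate (millions of
    transfers per second), so bytes per transfer x MHz gives MB/s.
    """
    bytes_per_transfer = bus_width_bits / 8  # bits -> bytes
    return bytes_per_transfer * effective_mhz


for width in (192, 256):
    mb_s = memory_bandwidth_mb_s(width, 3000)
    print(f"{width}-bit @ 3000 MHz: {mb_s:,.0f} MB/s = {mb_s / 1000:.0f} GB/s")
# 192-bit @ 3000 MHz: 72,000 MB/s = 72 GB/s
# 256-bit @ 3000 MHz: 96,000 MB/s = 96 GB/s
```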

Therefore, with a 256-bit graphics card, you get better performance, all else being equal. But a 192-bit graphics card with sufficiently faster RAM can match or beat a 256-bit card with slower RAM. To break even, the 192-bit card’s RAM frequency has to be about a third higher.

Performance

When it comes to bitrate, the performance of a graphics card depends on bandwidth, as we have already mentioned. A higher bandwidth allows a graphics card to access data more quickly and run at its maximum potential. However, a lower bandwidth can bottleneck a GPU’s performance sometimes.

Since every GDDR memory die takes a 32-bit slice of the memory bus, a 256-bit GPU uses more memory dies (eight) than a 192-bit GPU (six). How much this affects performance depends on what the graphics card is used for.

For example, if you play a high-end, texture-heavy game, the 256-bit version of a GPU will perform better than a 192-bit version of the same GPU at the same settings.
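
To make the die count concrete, here is a minimal sketch that applies the 32-bit-per-die figure from the paragraph above. The constant and function names are hypothetical, and the sketch assumes one die per 32-bit channel, ignoring layouts where two dies share a channel.

```python
BITS_PER_GDDR_DIE = 32  # per the text above: each GDDR die takes a 32-bit slice of the bus


def memory_die_count(bus_width_bits: int) -> int:
    """Number of memory dies needed to fill the bus, assuming one die per 32-bit channel."""
    if bus_width_bits <= 0 or bus_width_bits % BITS_PER_GDDR_DIE != 0:
        raise ValueError("bus width must be a positive multiple of 32 bits")
    return bus_width_bits // BITS_PER_GDDR_DIE


for width in (192, 256):
    print(f"{width}-bit bus: {memory_die_count(width)} memory dies")
# 192-bit bus: 6 memory dies
# 256-bit bus: 8 memory dies
```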

Overclocking

A graphics card’s bitrate cannot be overclocked like a processor’s clock speed. The bitrate is fixed by the number of memory interface pins on the GPU. Hence, you cannot overclock a 192-bit graphics card to upgrade it to a 256-bit graphics card.

However, you can increase the memory bandwidth by overclocking the card’s RAM frequency.

As the bandwidth is derived from bitrate and RAM frequency, an increase in RAM frequency can boost the performance of a graphics card.

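Therefore, even if you end up with a 192-bit GPU, you can narrow the gap to a 256-bit GPU, because a higher RAM frequency or memory clock partly makes up for the narrower bus. Fully closing the gap would take a RAM frequency about a third higher, which is more than a typical memory overclock delivers.

Here, you may want to consider the return on your investment. The same RAM overclock adds more bandwidth in absolute terms on a 256-bit GPU than on a 192-bit GPU, although the percentage gain is identical.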
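
How much extra RAM frequency would that take? The short sketch below estimates it from the bandwidth formula given earlier (bitrate divided by 8, multiplied by RAM frequency). The function name is hypothetical, and the 3,000 MHz starting point is simply the example frequency used in this article.

```python
def required_mhz_to_match(narrow_bits: int, wide_bits: int, wide_mhz: float) -> float:
    """RAM frequency the narrower bus needs to equal the wider bus's bandwidth.

    Follows from narrow_bits / 8 * f = wide_bits / 8 * wide_mhz.
    """
    return wide_mhz * wide_bits / narrow_bits


target = required_mhz_to_match(192, 256, 3000)
overclock_pct = (target / 3000 - 1) * 100
print(f"192-bit card needs {target:.0f} MHz ({overclock_pct:.1f}% higher) to match 256-bit at 3000 MHz")
# 192-bit card needs 4000 MHz (33.3% higher) to match 256-bit at 3000 MHz
```

A jump of roughly a third is far larger than the 128 MHz example used in this section, so in practice a memory overclock narrows the gap rather than closing it.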

For example, if you overclock a 3,000 MHz RAM frequency by 128 MHz, a 192-bit GPU’s memory bandwidth will increase from 72 GB/s to just over 75 GB/s.

In this case, a 256-bit GPU’s memory bandwidth will increase from 96 GB/s to just over 100 GB/s. Whereas overclocking a 192-bit GPU offers a boost of about 3 GB/s, overclocking a 256-bit GPU offers a boost of about 4 GB/s.

Therefore, if your budget allows, it’s better to get a 256-bit GPU.
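
The overclocking arithmetic in this section can be checked with a few lines of Python. This is only a sketch: `bandwidth_gb_s` is a made-up helper that applies the bandwidth formula from earlier in the article, and because that formula divides by 8 the results are in bytes per second (GB/s).

```python
def bandwidth_gb_s(bus_width_bits: int, effective_mhz: float) -> float:
    """Peak memory bandwidth in GB/s: (bits / 8) bytes per transfer x MHz / 1000."""
    return bus_width_bits / 8 * effective_mhz / 1000


BASE_MHZ = 3000
OVERCLOCK_MHZ = 128  # the example overclock used in the text

for width in (192, 256):
    before = bandwidth_gb_s(width, BASE_MHZ)
    after = bandwidth_gb_s(width, BASE_MHZ + OVERCLOCK_MHZ)
    gain = after - before
    print(f"{width}-bit: {before:.0f} -> {after:.3f} GB/s "
          f"(+{gain:.3f} GB/s, +{gain / before * 100:.2f}%)")
```

The absolute gain is larger on the wider bus (about 4 GB/s versus about 3 GB/s), while the relative gain is the same on both, since it depends only on the ratio of the new frequency to the old one.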

Applications

Both the 192-bit and 256-bit graphics cards can smoothly handle basic computer tasks and intermediate-level graphics works.

However, if you want to play games at very high resolutions with high graphical presets, maxed-out details, and lots of textures to render, it is better to use a graphics card with a 256-bit or wider bus.

Similarly, if you use the card for high-end graphic design work, a 256-bit GPU will perform better than a 192-bit GPU.

What Are Some Other Factors That Affect GPU Performance?

While memory bandwidth plays a key role, several other aspects impact real-world graphics card performance:

- GPU Cores – More CUDA cores or stream processors allow a GPU to render graphics and execute tasks faster in parallel. High-end GPUs like the RTX 3080 have more than 8,000 cores.

- Clock Speed – Measured in MHz, this indicates the operating frequency of the GPU core and memory. Higher is better for gaming fps. Graphics cards use boost clocks to dynamically overclock when thermal limits allow.

- Cache Memory – Specialized fast memory integrated into the GPU die acts as a data reservoir, reducing trips to the VRAM. Recent GPUs include more cache for performance gains.

- Cooling Design – Advanced cooling solutions with vapor chambers, heat pipes, and multiple fans enable cards to sustain boost clocks longer before throttling. This maintains fps stability during lengthy gaming sessions.

- Power Delivery – Robust VRM circuits with additional power phases provide clean and stable power and leave more room for overclocking. High-end cards can draw 300 W or more at peak load.

- Drivers – Well-optimized graphics drivers and firmware updates from AMD or Nvidia can boost performance in games or creative applications via software-side efficiency improvements.

When choosing a graphics card brand, Nvidia and AMD are the two main options. Third-party manufacturers like EVGA also offer custom Nvidia card designs with potential performance advantages.

Final Verdict

192 Bit and 256 Bit Graphics Card: Which One is Better?

The short answer is: when all other factors are constant, a 256-bit graphics card will perform better than a 192-bit graphics card. A 256-bit GPU moves more data at once, renders graphics faster, and copes better with high-quality settings at high resolution.

However, this performance difference does not depend solely on bus size or bitrate. Instead, it depends on bandwidth. Factors such as RAM speed and the number of RAM modules also matter. That’s why, when buying a graphics card, you should focus more on the memory bandwidth than on the bitrate.

Frequently Asked Questions

Does 192-bit vs 256-bit matter for 4K gaming?

For 4K gaming, a GPU with a 256-bit or wider bus can provide noticeably smoother frame rates than 192-bit cards in the same performance segment. The additional memory bandwidth helps transfer textures and geometry faster under the heavier rendering load of 3840 x 2160.

Is a 256-bit card always faster than 192-bit?

No. If the 256-bit card falls behind in other respects, such as core count, core clocks, or memory speed, a newer 192-bit card can outperform an older 256-bit model. The interface width is just one piece of the puzzle.

What bandwidth should I target for 1440p or 4K gaming?

For smooth 60+ fps 1440p gaming, look for a card whose bus width and memory speed together deliver at least 320 GB/s of memory bandwidth. For basic 4K, aim for 400 GB/s. Treat these as rough guidelines rather than hard limits.
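
As a hedged illustration of how to compare a spec sheet against these targets, the sketch below computes bandwidth from bus width and per-pin data rate (in Gbps, as memory speed is often listed) and checks it against the two figures above. The helper names and the two example configurations are assumptions made for this illustration, not figures from this article.

```python
# Bandwidth targets quoted in the answer above, in GB/s.
TARGETS_GB_S = {"1440p": 320, "4K": 400}


def bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s from bus width and per-pin data rate in Gbps."""
    return bus_width_bits / 8 * data_rate_gbps


def targets_met(bandwidth: float) -> list[str]:
    """Names of the resolution targets this bandwidth reaches."""
    return [name for name, minimum in TARGETS_GB_S.items() if bandwidth >= minimum]


# Illustrative configurations (assumed values, not taken from the article).
examples = {"192-bit at 15 Gbps": (192, 15.0), "256-bit at 14 Gbps": (256, 14.0)}
for label, (width, rate) in examples.items():
    bw = bandwidth_gb_s(width, rate)
    print(f"{label}: {bw:.0f} GB/s, meets: {targets_met(bw) or 'none'}")
```

With these assumed values, the 192-bit configuration clears the 1440p target but not the 4K one, while the 256-bit configuration clears both.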

What bitrate do pro graphic designers need?

Professional 3D modelers, animators, video editors, and effects artists working with 8K footage or complex multi-layered projects benefit from high-bandwidth GPUs like the Nvidia RTX A6000 48GB or AMD Radeon Pro W6800 32GB. These cards use 384-bit and 256-bit interfaces, respectively.

Will next-gen games require more than 256-bit interfaces?

Potentially, as resolutions continue to climb. But in the near term, a 256-bit bus combined with technologies like the DirectStorage API, which streams game assets from storage to the GPU more efficiently, should help keep bandwidth bottlenecks in check.