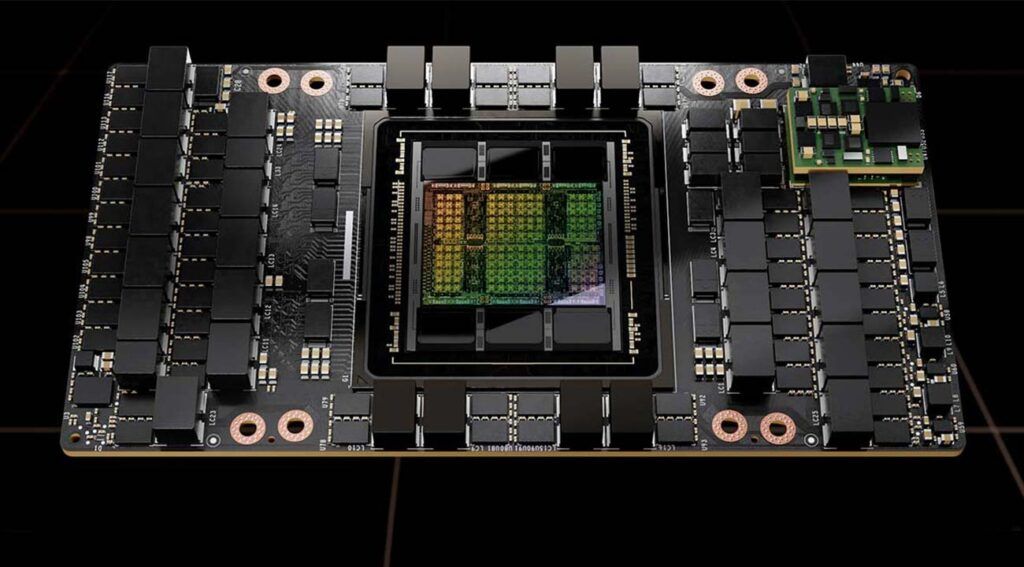

NVIDIA has just unveiled their Nvidia H100 GPU at the latest GPU Technology Conference and it is looking pretty impressive. Although plenty of rumors surrounding the GPU’s development was already seen on plenty of forums, now we have the concrete spec sheet.

So, in this article, we will be breaking down the specs and details surrounding the upcoming Hopper H100 GPU to give you a brief idea of what to expect from it. So without further ado, let’s dive in.

Whats in Nvidia Hopper H100 GPU?

Designed for Supercomputers, H100 focuses more on AI capabilities to streamline performance and work efficiency even further. Built on Custom 4 Nanometer TSMC Node, H100 packs a whopping 80 billion transistors which is a huge leap from A100’s 54 billion that currently exists on the market. Although no core count or clock speed has been teased yet, They did mention the H100 supporting PCIe 4.0 NVLink interface that ensures speed of up to 128GB/s. You can also expect a similar bandwidth for PCIe 5.0 systems that don’t use NVLink.

Keep in mind that this GPU supports HBM3 memory of 80GB with 3TB/s of bandwidth right out of the box. This is significantly higher, about 1.5 times to be precise compared to the A100’s HBM2E memory.

Consequently, these major upgrades enable the H100 to deliver up to 1000 Tera Flops of FP16 Computing, 500 of TF32, and 60 of General Usage FP64 computing.

While it all seems like huge improvements, there are some downsides too. Despite being based on a smaller node, the H100 has a TDP rating of up to 700 Watts. Whereas, the A100 SXM used to do just about right with 400 Watts.

Final Words

Nevertheless, we are still looking at about a 75% performance gain on the H100 GPU over its predecessor. These upgrades will brilliantly enhance supercomputing and AI workflows resulting in precise engineering and scientific work. There is no official release date yet, however, NVIDIA Plans to equip systems with the H100 GPU by Quarter 3 of 2022.